Hadoop and MongoDB Use Cases

发布于 2015-09-14 15:13:27 | 149 次阅读 | 评论: 0 | 来源: 网络整理

The following are some example deployments with MongoDB and Hadoop. The goal is to provide a high-level description of how MongoDB and Hadoop can fit together in a typical Big Data stack. In each of the following examples MongoDB is used as the “operational” real-time data store and Hadoop is used for offline batch data processing and analysis.

Batch Aggregation¶

In several scenarios the built-in aggregation functionality provided by MongoDB is sufficient for analyzing your data. However in certain cases, significantly more complex data aggregation may be necessary. This is where Hadoop can provide a powerful framework for complex analytics.

In this scenario data is pulled from MongoDB and processed within Hadoop via one or more Map-Reduce jobs. Data may also be brought in from additional sources within these Map-Reduce jobs to develop a multi-datasource solution. Output from these Map-Reduce jobs can then be written back to MongoDB for later querying and ad-hoc analysis. Applications built on top of MongoDB can now use the information from the batch analytics to present to the end user or to drive other downstream features.

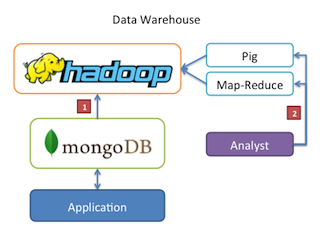

Data Warehouse¶

In a typical production scenario, your application’s data may live in multiple datastores, each with their own query language and functionality. To reduce complexity in these scenarios, Hadoop can be used as a data warehouse and act as a centralized repository for data from the various sources.

In this situation, you could have periodic Map-Reduce jobs that load data from MongoDB into Hadoop. This could be in the form of “daily” or “weekly” data loads pulled from MongoDB via Map-Reduce. Once the data from MongoDB is available from within Hadoop, and data from other sources are also available, the larger dataset data can be queried against. Data analysts now have the option of using either Map-Reduce or Pig to create jobs that query the larger datasets that incorporate data from MongoDB.

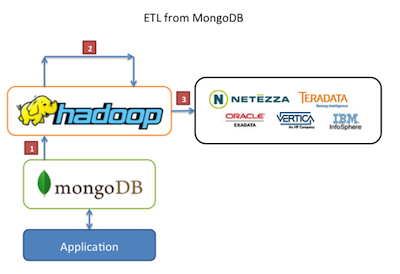

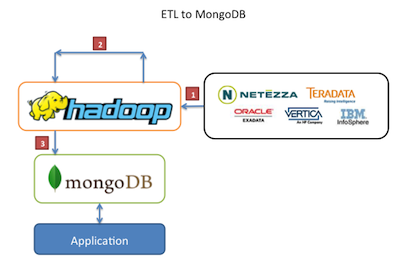

ETL Data¶

MongoDB may be the operational datastore for your application but there may also be other datastores that are holding your organization’s data. In this scenario it is useful to be able to move data from one datastore to another, either from your application’s data to another database or vice versa. Moving the data is much more complex than simply piping it from one mechanism to another, which is where Hadoop can be used.

In this scenario, Map-Reduce jobs are used to extract, transform and load data from one store to another. Hadoop can act as a complex ETL mechanism to migrate data in various forms via one or more Map-Reduce jobs that pull the data from one store, apply multiple transformations (applying new data layouts or other aggregation) and loading the data to another store. This approach can be used to move data from or to MongoDB, depending on the desired result.